
Platform one

June/July 2016 | Unmanned Systems Technology

Driverless cars

Road ahead for data fusion

Chip suppliers are positioning themselves for the transition from advanced driver assist systems (ADAS) to fully autonomous driverless cars as the architecture of the vision systems changes (writes Nick Flaherty).

“We are certainly in the early days of autonomous driving already, and we believe it’s a full progression from ADAS to full autonomous operation, not a separate category,” said Brooke Williams, business manager of the ADAS system-on-chip (SoC) business unit at Texas Instruments.

TI has shipped more than 15 million SoC devices for front-camera, surround-view, radar and sensor-fusion systems to 15 tier-one suppliers, which sell to 25 car makers across 100 separate vehicle lines.

“The building blocks are in there now, but to enable more advanced driving, fusion of that data needs to be done in a very intelligent way,” Williams said.

“As things progress to autonomous operation there will need to be a higher level of data integration, and what we are already seeing is the centralisation of that edge signal processing, so we will see dumb sensors feeding raw image data into a central location.

“When we start to see raw data movement, that’s when we need a lot more signal processing or a board with multiple SoCs. The beauty is that the architecture for image processing and control is heavily optimised for algorithms that run on the edge or in the middle.

“For centralised control you need to have a very efficient architecture, so when we talk about front camera algorithms they are the most challenging.

“You have to run six to ten algorithms on a single device, and that has to be in a very low power envelope of 2-4 W, and very few architectures can handle that level of processing at that power,” he said.
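As a rough illustration of how tight that envelope is, the sketch below divides the quoted 2-4 W budget evenly among six to ten algorithms. The even split is an assumption made for simplicity; real front-camera workloads share power unevenly, so this only bounds the average per algorithm.

```python
# Average power available per algorithm on a front-camera SoC, using the
# figures quoted above: six to ten algorithms sharing a 2-4 W envelope.
# Assumption: power is split evenly, which real workloads will not do.

def per_algorithm_budget_mw(envelope_w: float, n_algorithms: int) -> float:
    """Average power available to each algorithm, in milliwatts."""
    if n_algorithms <= 0:
        raise ValueError("need at least one algorithm")
    return envelope_w * 1000.0 / n_algorithms

# Tightest case: ten algorithms in 2 W. Loosest case: six algorithms in 4 W.
tightest = per_algorithm_budget_mw(2.0, 10)
loosest = per_algorithm_budget_mw(4.0, 6)
print(f"{tightest:.0f} mW to {loosest:.0f} mW per algorithm")
# prints: 200 mW to 667 mW per algorithm
```

Even at the generous end, each algorithm gets well under a watt on average.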

While TI would not comment on its next-generation devices for this, STMicroelectronics has extended its relationship with Israeli chip designer Mobileye for exactly this application.

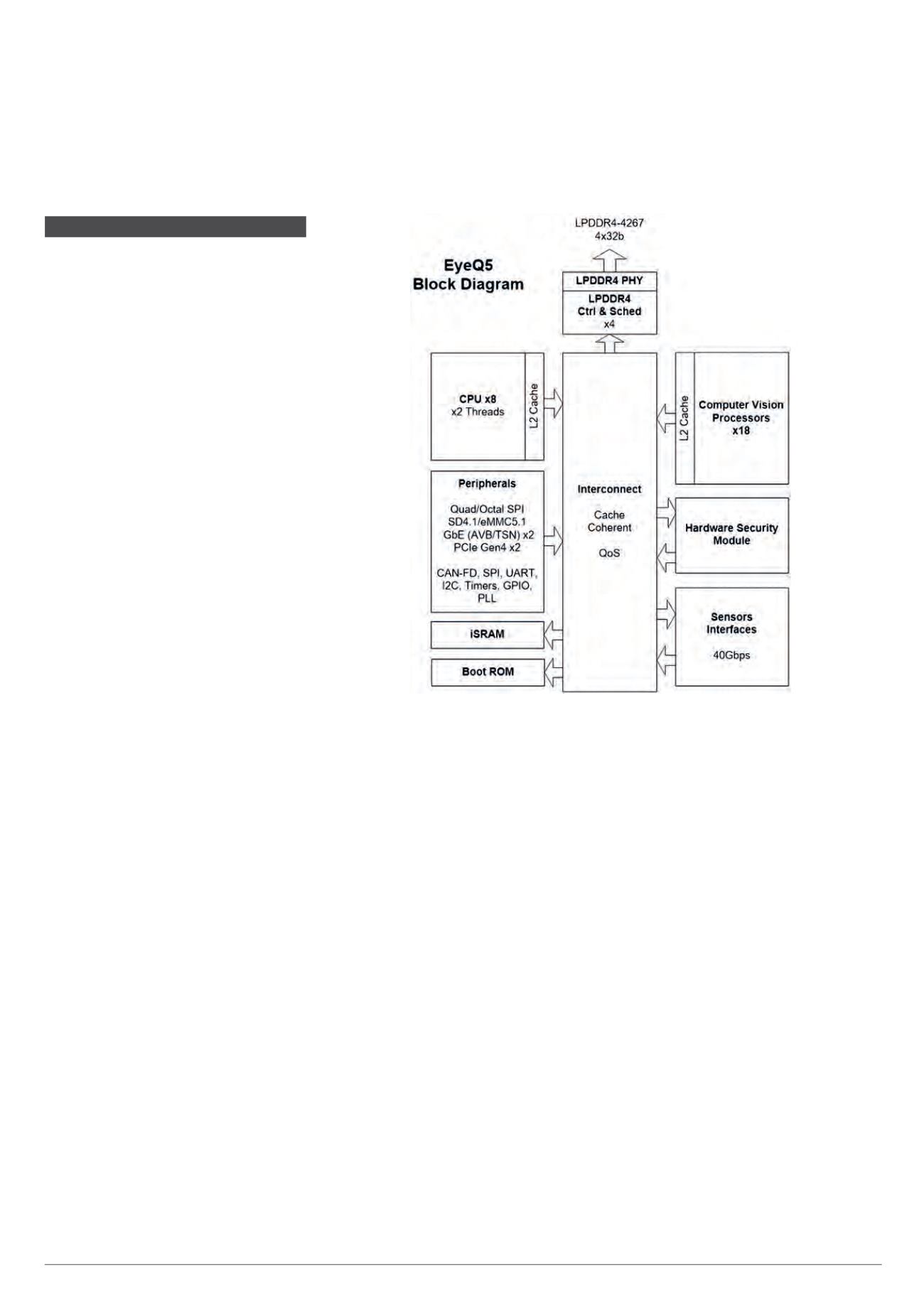

The two are working on the fifth-generation EyeQ5 SoC as the central computer for performing sensor fusion in fully autonomous vehicles.

The chip will be built in an advanced FinFET process at 10 nm or below, with eight multi-threaded CPU cores coupled with 18 of Mobileye’s next-generation vision processor cores.

This will provide eight times the performance of current devices, processing 12 trillion operations per second (Tops) with power consumption of less than 5 W.
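The stated targets imply a compute-efficiency figure, sketched below under the assumption that 12 trillion operations per second are delivered at the full 5 W ceiling. Because the quoted power is below 5 W, the result is a lower bound rather than a measured value.

```python
# Compute efficiency implied by the EyeQ5 targets quoted above.
# Assumption: 12 trillion operations/s at exactly 5 W; since actual power
# is stated to be below 5 W, the true efficiency would be higher.

def ops_per_watt(tera_ops_per_s: float, power_w: float) -> float:
    """Tera-operations per second delivered per watt."""
    return tera_ops_per_s / power_w

eyeq5 = ops_per_watt(12.0, 5.0)
print(f"at least {eyeq5:.1f} Tops/W")  # prints: at least 2.4 Tops/W
```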

Engineering samples of EyeQ5 are expected to be available by the first half of 2018, with development boards following in the second half for running both traditional computer vision and neural network algorithms.

“EyeQ5 is designed to serve as the central processor for future fully autonomous driving for both the sheer computing density – it can handle around 20 high-resolution sensors – and for increased functional safety,” said Prof Amnon Shashua, co-founder, CTO and chairman of Mobileye.

“This continues the development Mobileye began in 2004 with EyeQ1, with optimised architectures to support intensive computations at power levels below 5 W to allow passive cooling in an automotive environment.”
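To see why feeding raw data from around 20 high-resolution sensors into one chip is demanding, the back-of-envelope sketch below estimates the uncompressed data rate. The sensor parameters (1920 x 1080 pixels, 30 frame/s, 12 bits per raw pixel) are hypothetical illustrations, not figures from Mobileye or TI.

```python
# Back-of-envelope bandwidth for streaming raw (unprocessed) image data from
# many "dumb" sensors into one central processor.
# Hypothetical sensor parameters, not EyeQ5 specifications:
# 1920 x 1080 pixels, 30 frames/s, 12 bits per raw pixel.

def raw_stream_gbit_s(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed data rate of one image sensor, in Gbit/s."""
    return width * height * fps * bits_per_pixel / 1e9

per_sensor = raw_stream_gbit_s(1920, 1080, 30, 12)
total = 20 * per_sensor  # "around 20 high-resolution sensors"
print(f"{per_sensor:.2f} Gbit/s per sensor, {total:.1f} Gbit/s for 20 sensors")
# prints: 0.75 Gbit/s per sensor, 14.9 Gbit/s for 20 sensors
```

Higher resolutions, frame rates or bit depths scale the total linearly, which is why raw-data architectures push so much work onto the central processor.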

Mobileye is also adding a hardware security module to the chip so that system integrators can support over-the-air software updates and secure in-vehicle communications. The root of trust is established by a secure boot from an encrypted storage device.
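The idea of a root of trust can be illustrated with a simplified hash chain. In this hypothetical sketch, which is not Mobileye’s actual design, each boot stage embeds the SHA-256 digest of the next stage and only the first digest sits in immutable boot ROM, so tampering with any stage stops the boot at that point. A production secure boot would also verify public-key signatures and decrypt images from the encrypted store; both steps are omitted here to keep the example to the Python standard library.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical chain-of-trust sketch: each stage embeds the SHA-256 digest of
# the stage after it. Signature checks and image decryption are omitted.

@dataclass
class Stage:
    name: str
    image: bytes        # stage code as read from storage
    next_digest: bytes  # embedded digest of the following stage (b"" for last)

    def digest(self) -> bytes:
        # Hash code and embedded digest together so neither can be swapped alone.
        return hashlib.sha256(self.image + self.next_digest).digest()


def build_chain(images: list[tuple[str, bytes]]) -> tuple[bytes, list[Stage]]:
    """Build stages back to front; return (digest held in ROM, stages)."""
    stages: list[Stage] = []
    next_digest = b""
    for name, image in reversed(images):
        stage = Stage(name, image, next_digest)
        stages.insert(0, stage)
        next_digest = stage.digest()
    return next_digest, stages


def verify_chain(rom_digest: bytes, stages: list[Stage]) -> list[str]:
    """Boot stages in order, stopping at the first digest mismatch."""
    booted: list[str] = []
    expected = rom_digest  # the anchor: cannot be modified in the field
    for stage in stages:
        if stage.digest() != expected:
            break  # untrusted image: do not run it or anything after it
        booted.append(stage.name)
        expected = stage.next_digest
    return booted


rom, chain = build_chain([("bootloader", b"BL v1"), ("firmware", b"FW v1"), ("app", b"APP v1")])
print(verify_chain(rom, chain))  # ['bootloader', 'firmware', 'app']
chain[1].image = b"FW tampered"
print(verify_chain(rom, chain))  # ['bootloader']
```

Because the bootloader’s own digest covers the firmware digest it embeds, an attacker cannot replace the firmware and patch the expected value without also failing the check against ROM.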

The data interface is also vital, and EyeQ5 will support at least 40 Gbit/s through two PCIe Gen4 ports for inter-processor comms with other chips.
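As a sanity check on the interface figure, the sketch below works out the narrowest PCIe Gen4 link that could carry the traffic. It uses the Gen4 signalling rate of 16 GT/s per lane with 128b/130b encoding, assumes the 40 Gbit/s is split evenly across the two ports (per direction), and ignores packet overhead beyond line encoding. The article does not state EyeQ5’s actual lane count, so the result is illustrative only.

```python
# Minimum PCIe Gen4 link width per port to carry a target data rate.
# Gen4 signalling: 16 GT/s per lane, 128b/130b line encoding.
# Assumptions: even split over two ports; packet/protocol overhead beyond
# line encoding ignored, so real links would need some margin.

GEN4_GT_PER_S = 16.0
ENCODING_EFFICIENCY = 128 / 130
COMMON_WIDTHS = (1, 2, 4, 8, 16)  # common PCIe link widths (x1 ... x16)

def lane_rate_gbit_s() -> float:
    """Usable data rate of one Gen4 lane, per direction, in Gbit/s."""
    return GEN4_GT_PER_S * ENCODING_EFFICIENCY

def min_width(per_port_gbit_s: float) -> int:
    """Narrowest common link width whose raw capacity meets the target."""
    for width in COMMON_WIDTHS:
        if width * lane_rate_gbit_s() >= per_port_gbit_s:
            return width
    raise ValueError("target exceeds a x16 link")

per_port = 40.0 / 2
print(f"{lane_rate_gbit_s():.2f} Gbit/s per lane; x{min_width(per_port)} per port")
# prints: 15.75 Gbit/s per lane; x2 per port
```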

The EyeQ5 SoC is designed to be the central processor for fully autonomous driving